Non-realtime playback

The Image Sequence Player allows you to play Combined Per Pixel image sequences directly in Unity. This is useful when you want to play back at a higher resolution without encoding a video, or when rendering your scene with the Unity Recorder.

In this page

Getting started →

Setting up the Image Sequence Player in Unity →

Rendering Depthkit Studio assets to 3D-360 video from Unity →

- Preparing Depthkit assets for offline rendering →

- Unity setup →

- Encoding 360 video →

- Embedding spatial audio →

Getting started

- Unity 2019.4.x (LTS) or 2020.3 (LTS)

- Unity's Streaming Image Sequence Plugin (for version 2019, use 0.3.2-preview; for version 2020, use 0.16.0-preview).

If the Unity Streaming Image Sequence packages are hidden:

- In Project Settings > Package Manager, enable Preview Packages.

- In Package Manager, click the + and "Add package from git URL..." then enter the appropriate URL for your package (e.g. [email protected] ).

- Depthkit Core Expansion Package Phase 8 or newer OR Depthkit Studio Expansion Package Phase 5 or newer

For Depthkit Studio Expansion Package Phase 5 & 6, this also requires the experimental supplement packages to the standard Studio Expansion Package, containing the Mesh Sequence Export plugin and the Depthkit Image Sequence Player Plugin. Contact [email protected] to get access.

- A Depthkit Core/Cinema or Depthkit Studio Combined Per Pixel image sequence exported directly from Depthkit.

Create a new Unity project and import your desired Depthkit Studio Expansion packages as outlined in Depthkit Studio Expansion.

Setting up the Image Sequence Player in Unity

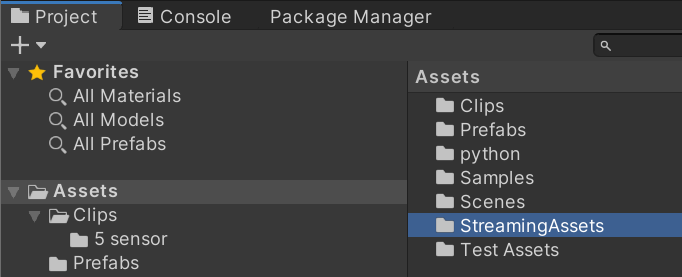

- Create a StreamingAssets folder in your Assets directory in Unity.

- Import your metadata file into your Assets folder. Import the Combined Per Pixel image sequence into the StreamingAssets folder. This may take some time to import for larger sequences.

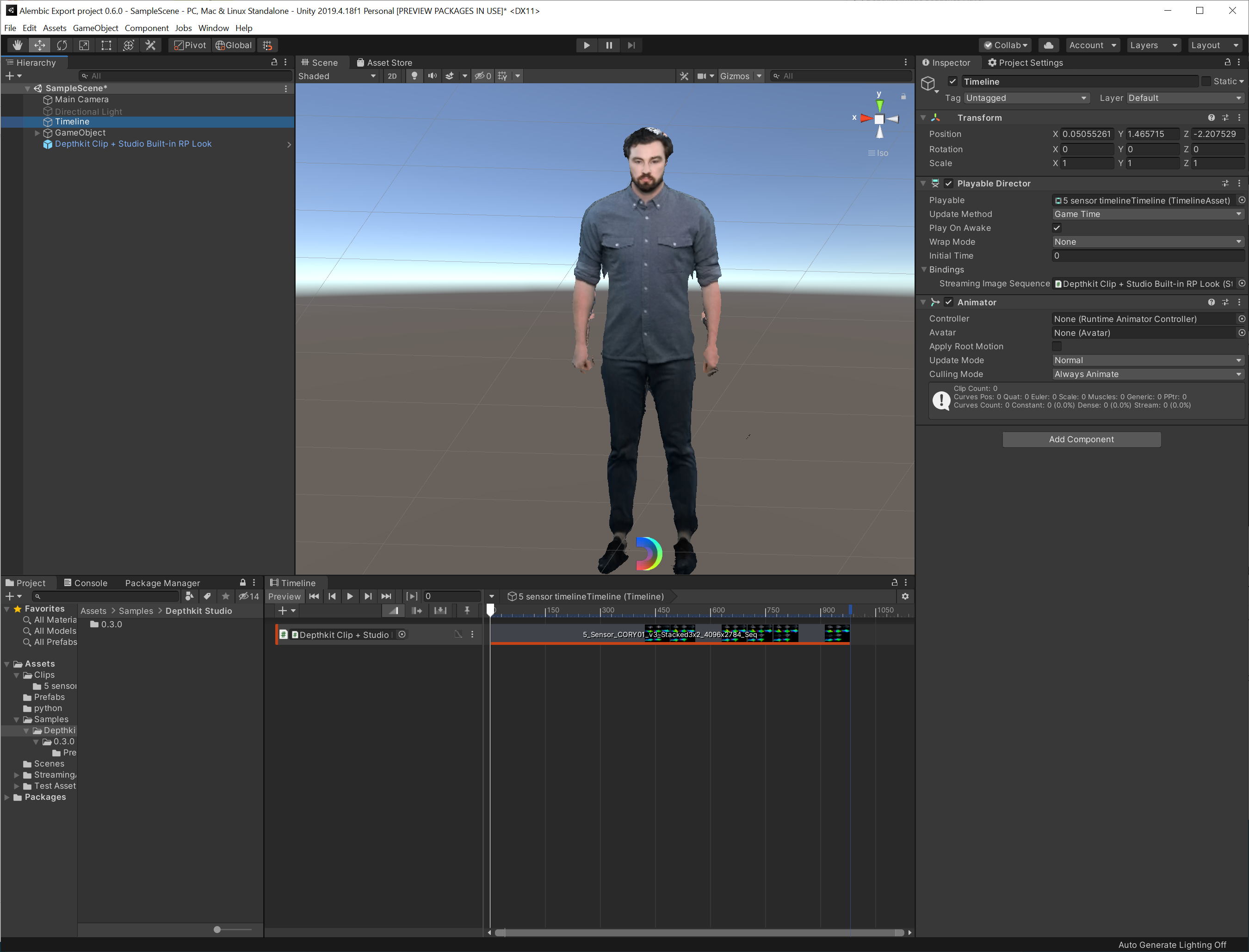

- Locate your preferred Depthkit Studio prefab under Assets → Samples and drag it into the Hierarchy.

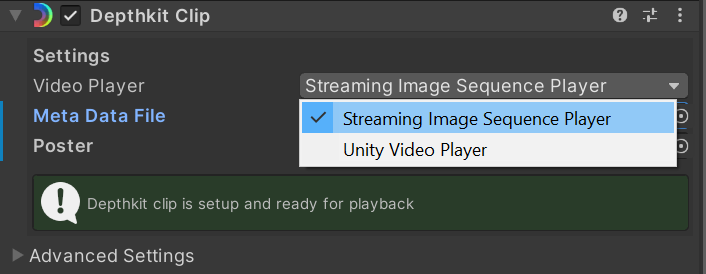

- In the Depthkit Clip component, select the video player dropdown menu and select the Streaming Image Sequence Player.

- Set up your Depthkit clip by linking the metadata file in the same component.

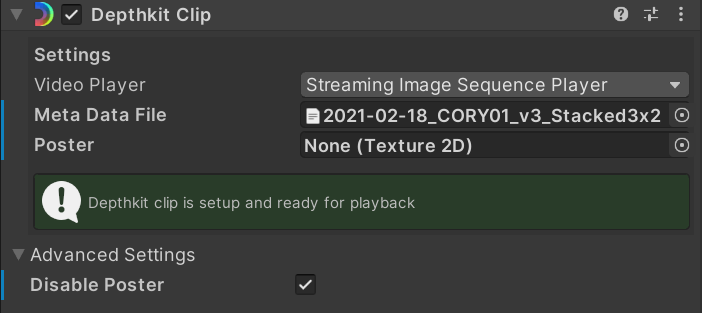

- Open the Advanced clip settings to disable the poster frame.

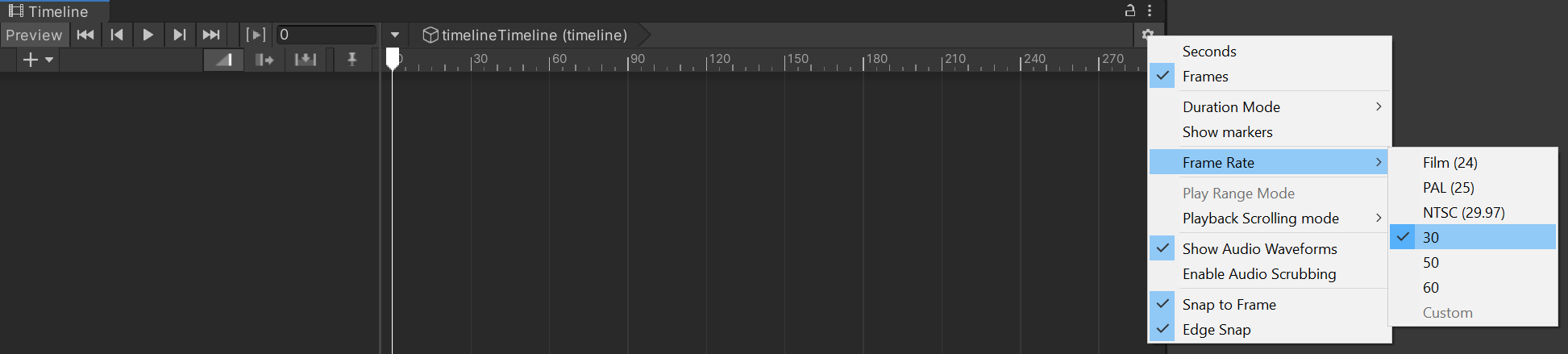

- Create an empty game object and create a Timeline asset. Ensure the timeline is set to 30 FPS under your Timeline settings.

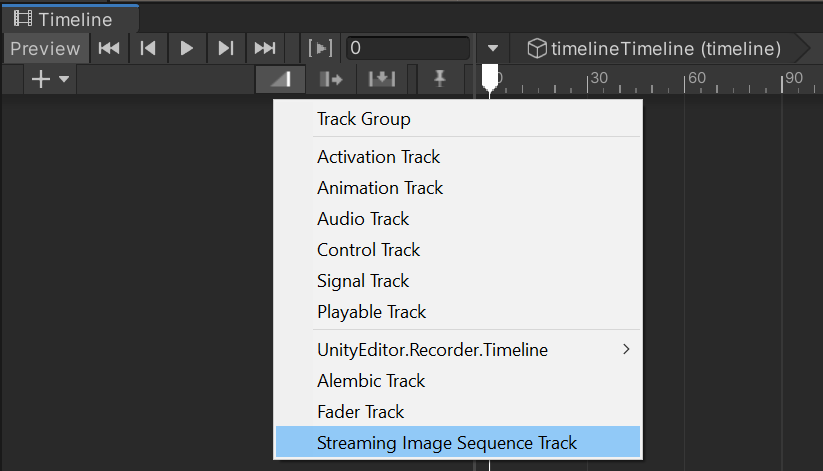

- Add a streaming image sequence track to the Timeline.

- Drag your image sequence from the streaming assets folder onto your new track.

By default, the track misinterprets the length of your sequence. Ensure that the clip's duration in frames matches the number of frames in your sequence. To correct the duration, drag the end of the clip inward until you reach the correct number of frames.
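To know the target frame count before trimming the clip, you can count the files in the sequence folder. The following is a minimal Python sketch run outside of Unity, not part of the Depthkit tooling: the function names are hypothetical, and it assumes the sequence is a flat folder of PNG files and that the Timeline runs at 30 FPS as described above.

```python
from pathlib import Path


def sequence_frame_count(folder, extension=".png"):
    """Count the image files with the given extension directly inside `folder`."""
    return sum(
        1
        for path in Path(folder).iterdir()
        if path.is_file() and path.suffix.lower() == extension.lower()
    )


def clip_duration_seconds(frame_count, fps=30):
    """Duration a Timeline clip needs in order to span exactly `frame_count` frames."""
    return frame_count / fps
```

For example, `clip_duration_seconds(sequence_frame_count("Assets/StreamingAssets/MySequence"))` gives the duration the clip should show once it is trimmed; the folder path here is only a placeholder.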

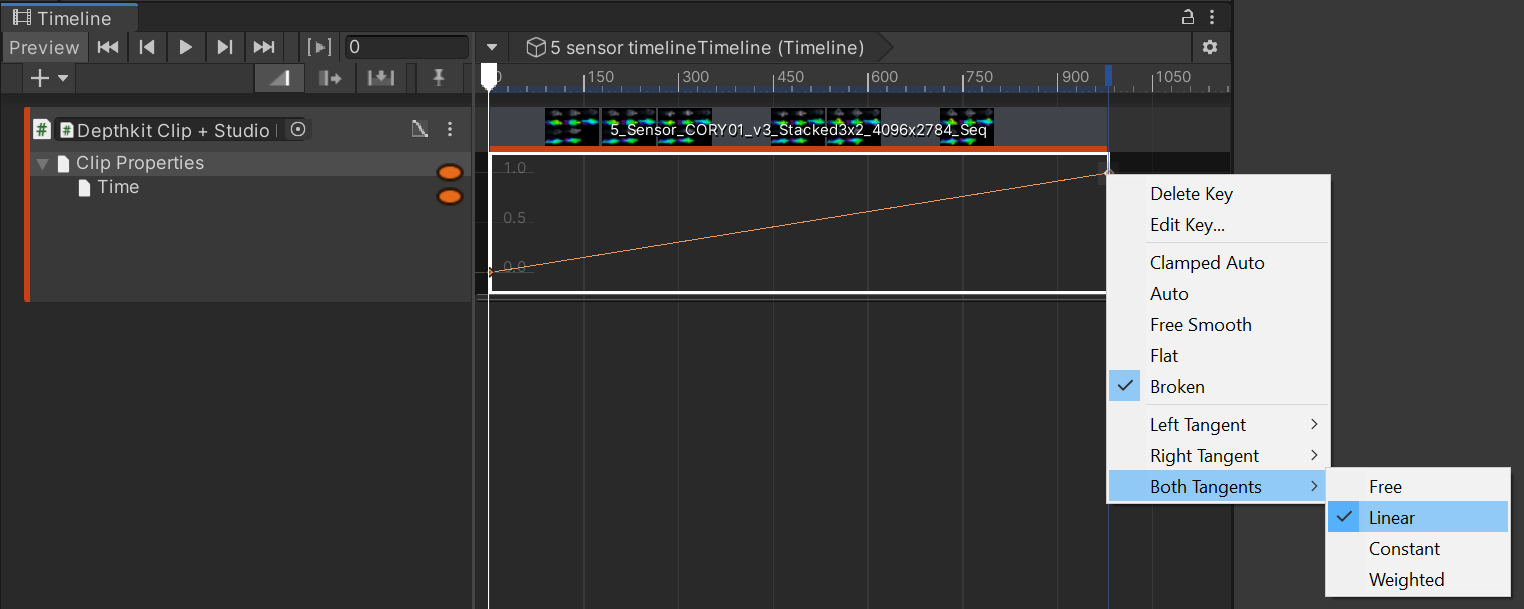

- The Timeline defaults to Clamped Auto which automatically applies a curve to your clip. To remove this curve, right click the tangents and set both tangents to linear.

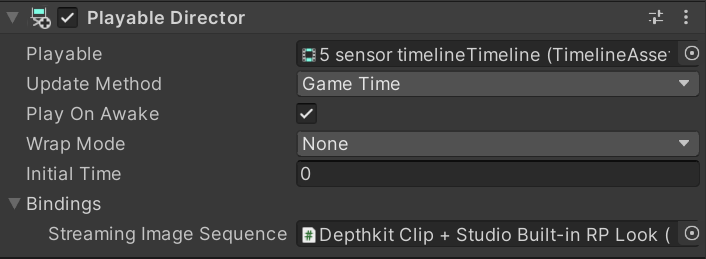

- Drag the playable asset onto the Playable Director created on your Depthkit clip. A binding foldout should appear at the bottom of the Playable Director. Open it, then drag or select the game object that has your Depthkit clip.

- Now your clip will play back as an image sequence!

Rendering Depthkit Studio assets to 3D-360 video from Unity

Requirements

- Depthkit Combined-per-Pixel Assets (Video & Metadata)

- Unity 2019.4.x LTS

- Depthkit Studio Expansion Package Phase 6+

- AVPro 2.x

- Unity Recorder 2.5.5+ (Installed from Unity Package Manager)

- Scatter's AV Pro 2.x Timeline Package Mod

Preparing Depthkit assets for offline rendering

The primary difference between real-time-ready assets and those for offline rendering is that the latter are no longer bound by the performance optimizations needed for real-time playback, meaning you can use higher-resolution and higher-quality assets. Follow our asset encoding guide for best practices for high-quality assets.

Unity setup

Project setup

Create or open a new project in the pipeline of your choice.

To install AVPro2, open the Asset menu, and click Import Package > Custom Package..., select the AVPro2 Unity Package and click Open.

If you haven't yet done so, add the plugins from the Depthkit Studio Expansion Package Phase 6 in the Package Manager.

- depthkit.core

- depthkit.studio

- depthkit.avpro

- Any of the additional plugins needed for your render pipeline

- Import the appropriate Depthkit Studio prefab for your render pipeline.

Modifying & configuring AVPro 2

In the Project bin, navigate to Assets > AVProVideo > Extensions > Timeline and select _AVProVideo.Extensions.Timeline.asmdef

- In the Inspector, under Assembly Definition References, click the + button.

- Click the Object Picker in the newly generated field, and search for Unity.Timeline (Packages/com.unity.timeline/Runtime/Unity.Timeline.asmdef) and select it.

In Edit > Project Settings, under Player > Other Settings, enter AVPRO_PACKAGE_TIMELINE into the 'Scripting Define Symbols' field. This activates the AVPro 2.x timeline extension.

In the Project Bin in Assets > AVProVideo > Extensions > Timeline, replace the following with our forked versions found in the AV Pro 2.x Timeline Package Mod.

- MediaPlayerControlAsset.cs

- MediaPlayerControlBehaviour.cs

- MediaPlayerControlMixerBehavior.cs

- MediaPlayerControlTrack.cs

In the Project Bin in Assets > Editor, add the VideoCaptureSettingsWindow.cs file found in the Editor folder of the AV Pro 2.x Timeline Package Mod. Create the Editor folder in Assets if none exists.

Preparing Depthkit clips

- Add Depthkit clip(s) to the project in Assets > Clips.

- Move the Combined-per-Pixel video(s) into 'StreamingAssets' folder.

- Right-click in the Project Bin in Assets > Clips and click Create > AVProVideo > Media Reference.

- Link the Media Reference to your video clip by selecting the Media Reference, and in the Inspector, clicking "Browse..." and then selecting your video clip.

- Add the Depthkit prefab into the hierarchy, and select it.

- Under the Depthkit Clip component, change Video Player to 'AV Pro Video Player'.

- Drag the metadata file for your clip into the 'Metadata' field.

- Under 'Advanced Settings', check the box for 'Disable Poster'.

- Scroll down to the Media Player component. Click the folder button, and select your Depthkit clip from Media References.

- Disable `Auto Open` and `Auto Play`.

Preparing the timeline

Create an empty Game Object. Select it.

In the Timeline pane, click Create, and save the new Timeline asset in your Assets folder.

Create a Media Player Control Track

Create a clip for the track by adding from Media Reference

Choose your Depthkit clip Media Reference from the available references.

Extend region in the timeline to match the full clip.

Drag the Depthkit object in the hierarchy onto the Media Player Control Track to link the Depthkit media player to the Timeline.

With the timeline clip region selected, in the Inspector:

- Enter 0 into the Start field.

- Enable Frame Accurate Seek to ensure the recorder advances in lockstep with the video on the timeline.

- The preload time can be used to load your video clip ahead of time to ensure smooth playback on start. Be sure the media player's GameObject is enabled at the time the preload hits on the timeline.

To globally apply this setting to all AVPro Playables on a timeline or nested timelines, click the Capture menu made available by the VideoCaptureSettingsWindow.cs script above, and click Video Capture.

Drag the highest level timeline or any timeline with media control asset clips on it into the capture settings window's playable director slot:

Now the list in the window shows which clips are enabled or disabled for frame-accurate playback. Press the Toggle button until they all match your desired playback accuracy. It's best to have them all true or all false, not a mix:

- true for 360 Rendering

- false for Real-Time 6DoF Builds

Preparing Unity Recorder

With your main timeline selected, in the Timeline pane, right-click in the left area of the pane and select UnityEditor.Recorder.Timeline > Recorder Track.

Right-click in the right area of the pane and select Add a Recorder Clip.

Adjust the beginning and end of the Recorder Clip on the timeline to determine which section of your timeline is recorded.

Select the recorder clip on the timeline, and in the Inspector, set the following settings:

- Recorder Clips:

  - Recorder: Image Sequence

- Capture

  - Source: 360 View

  - Camera: Main Camera (or a tagged camera of your choosing)

  - Output Dimensions: W 4096 H 4096

  - Cubemap Size: 2048 - This should be at least the vertical resolution of each eye. In the case of 4096 x 4096 (stereo top-bottom), this is 2048 as each eye is 4096 x 2048.

- Format

  - Media File Format: PNG is higher quality, JPG will produce smaller files.

- Output File

  - Path: To prevent Unity from slowing down as it adds each exported frame to the Project, select an output destination outside of your Unity project folder. Exporting to an SSD will go faster than to an HDD. Make sure there's plenty of space on the drive as well.
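The Cubemap Size rule in the Capture settings can be written out as a small calculation. This is an illustrative Python sketch, not part of Unity Recorder or Depthkit: the function names are hypothetical, and it assumes a stereo top-bottom layout in which each eye takes half of the output height.

```python
def per_eye_resolution(width, height, stereo_top_bottom=True):
    """Return (width, height) of one eye for the given output dimensions."""
    eye_height = height // 2 if stereo_top_bottom else height
    return width, eye_height


def minimum_cubemap_size(width, height, stereo_top_bottom=True):
    """Smallest cubemap size that is at least the vertical resolution of each eye."""
    _, eye_height = per_eye_resolution(width, height, stereo_top_bottom)
    return eye_height
```

With the 4096 x 4096 stereo output used in these settings, `per_eye_resolution(4096, 4096)` is `(4096, 2048)` and `minimum_cubemap_size(4096, 4096)` is `2048`, matching the value entered in the Recorder.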

Note that the 360 recording framerate will be determined by the FPS of the timeline asset itself (this asset must contain the Unity Recorder Clip):

Press Play in the editor to start playback. Once this clip begins, the recording will start.

Visual effects and render pipeline considerations

VFX Graph

The Unity Recorder will frame step the application so VFX Graph will always remain in sync.

However, if you use particle effects such as point sprites or trails billboarded along the camera plane, be aware that the billboarding will be in reference to the face of the cubemap it was visible from, not live from the viewer's perspective. This means the particles may look slightly tilted and will shift direction as they cross from one face to another.

Post processing

Some post processing effects will give undesired results when the faces of the cube map are stitched together. Never use vignette or chromatic aberration.

Volumetric Lighting with Reprojection — Using Reprojection with volumetric lights does give a better looking result when running in 6DoF but will cause harsh artifacts when capturing 360. Disable Reprojection for the best results.

Bloom

Bloom is another screen space effect that can cause issues over camera boundaries. If an object contributing to bloom is highly visible near an edge and not visible from an adjacent camera (as in the image below), the bloom will be strong on one cube face and non-existent on the other, causing a harsh line in the 360 capture.

To fix this, either dial down the bloom settings, disable bloom altogether (globally or locally), or apply a bespoke post processing stack after the 360 capture and composite manually.

Encoding 360 video

H.264

Currently, unless we have full control of target hardware, H.264 is still the most widely supported video codec to use. It is not ideal because it only supports 8 bit color and is not designed for the higher resolutions required for 360 video, and is not as efficient as current state of the art codecs like H.265, VP9 or AV1.

The resolutions required for high quality 360 video are technically outside of the H.264 Level 5.2 spec for resolution, however many hardware accelerated devices are able to support these resolutions, but are still constrained to other 5.2 spec limitations, such as motion vector range. For this reason, when encoding high resolution H.264 with ffmpeg it is necessary to force the motion vector range into the appropriate range, as ffmpeg defaults to level 6 specifications at higher resolutions. The out-of-spec motion vector range leads to artifacts at decode time on most decoders.

Encoding with ffmpeg

`ffmpeg -r 60 -i ./image_001_%05d.png -vf scale=out_color_matrix=bt709:out_range=full -colorspace bt709 -color_primaries bt709 -color_trc bt709 -color_range pc -c:v libx264 -x264opts mvrange=511 -crf 16 -pix_fmt yuv420p output_360.mp4`

Command breakdown

- `-vf scale=out_color_matrix=bt709:out_range=full` - Adds the `scale` video filter, telling it to use the bt709 color matrix and full color range. By default ffmpeg will use the bt601 color space and limited range (and by default it does not include that in the metadata!). The bt709 color space is a wider gamut than bt601, meaning colors will be better represented, and most decoders assume bt709 if no metadata is present, and some assume bt709 even if metadata is present. Full color range is important to preserve detail in dark and light areas, and helps mitigate color banding in dark gradients.

- `-colorspace bt709 -color_primaries bt709 -color_trc bt709 -color_range pc` - Adds the metadata for the bt709 color space, as well as full color range metadata. Note that these flags ONLY set metadata, but are not involved in the RGB → YUV conversion (that is what the above video filter is for). Again, by default ffmpeg does not include this metadata, so it must be set manually.

- `-x264opts mvrange=511` - Defines the motion vector range parameter, as described above, to stay within the H.264 Level 5.2 spec (below 512). This is critical to avoid decompression artifacts.

- `-pix_fmt yuv420p` - Specifies the YUV 4:2:0 chroma sub-sampling pixel format. By default ffmpeg will pick a higher quality pixel format with less chroma sub-sampling when possible (for RGB PNG input this is typically 4:4:4), though this is not widely supported by decoders.
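When the encode is launched from a script, the same flags can be assembled programmatically. The following is a minimal Python sketch, not part of the Depthkit tooling: the function name `h264_360_command` and its parameters are hypothetical, while every ffmpeg flag is taken from the command above. It only builds the argument list, and it rejects `mvrange` values of 512 or more, following the Level 5.2 limit described above.

```python
def h264_360_command(input_pattern, output_path, fps=60, crf=16, mvrange=511):
    """Build the ffmpeg argument list for the H.264 360 encode described above."""
    if not 0 < mvrange < 512:
        # The text above requires the motion vector range to stay below 512.
        raise ValueError("mvrange must be between 1 and 511")
    return [
        "ffmpeg",
        "-r", str(fps),                     # frame rate of the image sequence
        "-i", input_pattern,                # e.g. ./image_001_%05d.png
        # RGB -> YUV conversion with the bt709 matrix and full range
        "-vf", "scale=out_color_matrix=bt709:out_range=full",
        # Metadata only: tags the stream as bt709, full range
        "-colorspace", "bt709",
        "-color_primaries", "bt709",
        "-color_trc", "bt709",
        "-color_range", "pc",
        "-c:v", "libx264",
        "-x264opts", f"mvrange={mvrange}",  # keep motion vectors in range
        "-crf", str(crf),
        "-pix_fmt", "yuv420p",              # 8-bit 4:2:0 for broad decoder support
        output_path,
    ]
```

Assuming ffmpeg is on the PATH, the list can be passed to `subprocess.run(h264_360_command("./image_001_%05d.png", "output_360.mp4"), check=True)`. Passing a list rather than a single string avoids shell quoting issues with the `%05d` pattern.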

H.265

If the target hardware supports it, H.265 is a much better choice. Currently the Quest 1 and 2 support H.265 natively, but at different resolutions/framerates. The following article lists the hardware capabilities: https://creator.oculus.com/blog/encoding-high-resolution-360-and-180-video-for-oculus-go/

10-bit vs 8-bit color

One of the biggest improvements H.265 has in terms of visual quality is the ability to support higher bit depths than H.264, which is limited to 8 bit YUV. 8-bit video suffers from poor color reproduction in darker areas, and poorer color reproduction in general than 10-bit.

If the target hardware and delivery platform support it, 10-bit color is much higher quality. However, there can be issues with preserving 10-bit color if there are transcoding steps in the delivery platform's pipeline.

Encoding with ffmpeg

`ffmpeg -r 60 -i ./image_001_%05d.png -crf 15 -pix_fmt yuv420p10le -color_range pc -c:v libx265 output_360.mp4`

Command breakdown

- `-c:v libx265` - Specifies the H.265 encoder via libx265

- `-pix_fmt yuv420p10le` - Specifies a 10-bit YUV 4:2:0 pixel format

  - Change this to `yuv420p` for 8-bit encodes

- `-color_range pc` - We've found that with 10-bit color H.265, full color range is important in maintaining the original color. Without this flag, the colors come out too dark.
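The switch between 10-bit and 8-bit encodes comes down to the pixel format. The following Python sketch makes that choice explicit; it is an illustration rather than part of the Depthkit tooling, the function names are hypothetical, and the flags are only those from the H.265 command above.

```python
def h265_pix_fmt(bit_depth):
    """Map a bit depth to the 4:2:0 pixel format named in the breakdown above."""
    formats = {8: "yuv420p", 10: "yuv420p10le"}
    if bit_depth not in formats:
        raise ValueError("bit_depth must be 8 or 10")
    return formats[bit_depth]


def h265_360_command(input_pattern, output_path, bit_depth=10, fps=60, crf=15):
    """Build the ffmpeg argument list for the H.265 360 encode described above."""
    return [
        "ffmpeg",
        "-r", str(fps),
        "-i", input_pattern,
        "-crf", str(crf),
        "-pix_fmt", h265_pix_fmt(bit_depth),
        "-color_range", "pc",   # full range, which the text above found keeps colors from darkening
        "-c:v", "libx265",
        output_path,
    ]
```

Calling `h265_360_command("./image_001_%05d.png", "output_360.mp4", bit_depth=8)` produces the 8-bit variant for delivery platforms that do not preserve 10-bit color.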

Embedding spatial audio

The FB360 Encoder is a tool included with Spatial Workstation that takes 360 videos and spatialized audio, then transcodes and remuxes them to a few different 360 video formats.

Get the Spatial Workstation (AAX) and (AAX Addons)

On Windows, you will need a custom version of FFmpeg, as their installer fails during that step.

Follow these instructions to get it compiled.

Follow these instructions to install Python 2.7 and MP4Box.

Spatial audio

The FB360 Encoder transcodes a number of multi-channel spatial audio formats into a chosen destination format. The tool and its documentation are fairly opaque about the exact channel layouts of the output formats.

The spatial workstation can output different spatial audio formats. For the highest quality, we recommend using "B-format (3rd order ambiX)" which is a 16-channel .wav file.

In addition to spatialized audio, an optional head-locked stereo .wav file may be provided.

These input 16 or 18 channels are mixed down to a smaller number of channels in the final video, depending on the output format. For the two formats described below, 8.2 TBE is the final channel layout used.
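The 16 and 18 channel counts follow from the ambisonic order: a full-sphere ambisonic signal of order N carries (N + 1)² channels, and the optional head-locked stereo file adds two more. A small Python sketch of that arithmetic, with hypothetical function names:

```python
def ambisonic_channel_count(order):
    """Number of channels in a full-sphere ambisonic signal of the given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


def encoder_input_channels(order=3, head_locked_stereo=False):
    """Total input channels: the ambisonic bed plus an optional head-locked stereo pair."""
    return ambisonic_channel_count(order) + (2 if head_locked_stereo else 0)
```

For the recommended 3rd order ambiX input, `encoder_input_channels()` is 16, and `encoder_input_channels(head_locked_stereo=True)` is 18.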

Output formats

Facebook 360 Video

This is the format used by Oculus Media Studio. Note that when deploying to Oculus Media Studio, the video will be re-encoded to a few different versions in order to support different target hardware. If this is the ultimate destination, the video uploaded to Oculus should have a higher bitrate than you would normally want to use, as they handle re-encoding and bitrates automatically.

This format also works natively on the Quest by loading the video file directly onto the device! Follow these instructions to copy the video to your device.

Note: these instructions mention the Oculus Video app, but it is now called Oculus TV.

Note: while the Quest does support H.265 with 10-bit color via sideloading, Oculus Media Studio does not properly handle 10-bit color in the streamed versions, which results in glitched videos on Quest 2. Strangely, Quest 1 plays back 10-bit videos from Oculus Media Studio just fine.

https://twitter.com/ID_AA_Carmack/status/1400543710388097028?s=20

Experimental → FB360 Matroska (Spatial Workstation 8 Channel)

Use this for AVPro 360 playback compatibility.

https://www.renderheads.com/content/docs/AVProVideo/articles/feature-360audio.html

The documentation makes it sound like other formats are possible to use, but in practice, this is the only supported format where the spatialized audio comes through properly.
